Source: Microsoft post

New trends in AI, LLMs and application development

The rise of AI and large language models (LLMs) has transformed various industries, enabling the development of innovative applications with human-like text understanding and generation capabilities. This revolution has opened up new possibilities across fields such as customer service, content creation, and data analysis.

As LLMs rapidly evolve, the importance of Prompt Engineering becomes increasingly evident. Prompt Engineering plays a crucial role in harnessing the full potential of LLMs by creating effective prompts that cater to specific business scenarios. This process enables developers to create tailored AI solutions, making AI more accessible and useful to a broader audience.

The challenges we have heard about Prompt Engineering

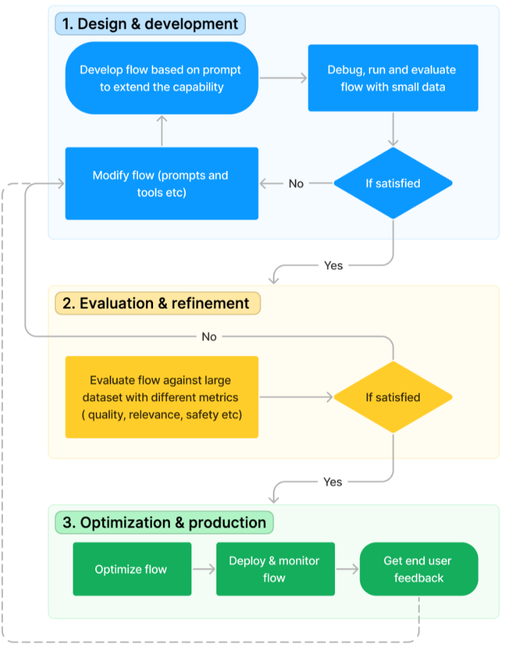

Prompt Engineering, an essential process for generating high-quality content using LLMs, remains an iterative and challenging task. This process involves several steps, including data preparation, crafting tailored prompts, executing prompts using the LLM API, and refining the generated content. These steps form a flow that users iterate on to fine-tune their prompts and achieve the best possible content for their business scenario.
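The iterative loop described above (prepare data, craft a prompt, execute it, refine) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not prompt flow code: `fake_llm` is a hypothetical stub in place of a real LLM API, and the dataset, prompt templates, and exact-match scoring are invented for the example.

```python
from string import Template

# Hypothetical lookup used only by the stub model below.
CAPITALS = {"France": "Paris", "Japan": "Tokyo"}


def fake_llm(prompt: str) -> str:
    """Stub standing in for an LLM API call (not a real provider SDK).

    It answers tersely only when the prompt explicitly asks for a capital,
    which is enough to show how prompt wording changes measured quality.
    """
    if "capital" in prompt:
        for country, city in CAPITALS.items():
            if country in prompt:
                return city
    return "That is a country with a long history."


# Step 1 (data preparation): a tiny labeled dataset, illustrative only.
DATASET = [
    {"country": "France", "expected": "Paris"},
    {"country": "Japan", "expected": "Tokyo"},
]

# Step 2 (prompt crafting): each candidate refines the previous attempt.
CANDIDATES = [
    Template("Tell me about $country."),
    Template("What is the capital of $country? Reply with the city name only."),
]


def accuracy(template: Template) -> float:
    """Step 3 (execution): run the prompt on every row and score the outputs."""
    hits = 0
    for row in DATASET:
        output = fake_llm(template.substitute(country=row["country"]))
        hits += output.strip() == row["expected"]
    return hits / len(DATASET)


def iterate(candidates, threshold=1.0):
    """Step 4 (refinement): try candidates in order until one meets the bar."""
    for i, template in enumerate(candidates):
        score = accuracy(template)
        print(f"iteration {i}: accuracy={score:.2f}")
        if score >= threshold:
            return i, score
    return None
```

Calling `iterate(CANDIDATES)` prints the accuracy of each attempt and returns `(1, 1.0)`: the open-ended first prompt scores 0.0 against the expected answers, while the refined prompt that asks for the city name only scores 1.0.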

Figure 1 shows the iterative process for prompt development. While the ideation step is easily achieved with the playgrounds provided by LLM service providers, moving LLM-infused applications into production involves numerous tasks akin to those in other engineering projects.

Figure 1 Iterative process for prompt development

Prompt Engineering offers significant potential for harnessing the power of LLMs, but it also presents several challenges. Here are some of the main challenges, grouped into three categories:

- Design and development: Users need to understand the LLMs, experiment with different prompts, and use complex logic and control flow to create effective prompts. They also face a cold start problem when they have no prior knowledge or examples to guide them.

- Evaluation & refinement: Users need to ensure that the outputs are consistent, helpful, honest, and harmless, avoiding potential biases and pitfalls. They also need to define and measure prompt quality and effectiveness using standardized metrics.

- Optimization & production: Users need to monitor and troubleshoot prompt issues, compare and improve prompt variants, optimize prompt length without compromising performance, deal with token limitations, and secure their prompts from injection attacks. They also need to collaborate with other developers and ensure stability at large data volumes.
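One of these optimization tasks, keeping a prompt within the model's token limit, can be sketched as a simple budget: always keep the instructions and the question, then add context documents in priority order while they still fit. The word-count tokenizer and the function names are assumptions for illustration; production code would count tokens with the target model's own tokenizer.

```python
from __future__ import annotations


def count_tokens(text: str) -> int:
    # Crude assumption: one token per whitespace-separated word. Real LLM
    # tokenizers split text differently, usually into more tokens.
    return len(text.split())


def build_prompt(instructions: str, documents: list[str], question: str,
                 max_tokens: int) -> str:
    """Add context documents in priority order until the token budget runs out."""
    budget = max_tokens - count_tokens(instructions) - count_tokens(question)
    if budget < 0:
        raise ValueError("instructions and question alone exceed max_tokens")
    kept = []
    for doc in documents:
        cost = count_tokens(doc)
        if cost > budget:
            break  # stop at the first document that no longer fits
        kept.append(doc)
        budget -= cost
    return "\n\n".join([instructions, *kept, question])
```

For example, `build_prompt("Answer briefly.", ["alpha beta gamma", "delta epsilon", "zeta eta theta iota"], "What is alpha?", max_tokens=10)` keeps the first two documents and drops the third, because the instructions and question already use 5 of the 10 tokens.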

Introducing prompt flow

Prompt flow is a powerful feature within Azure Machine Learning (AzureML) that streamlines the development, evaluation, and continuous integration and deployment (CI/CD) of prompt engineering projects. It gives data scientists and LLM application developers an interactive experience that combines natural language prompts, a templating language, a set of built-in tools, and Python code.
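To make the mix of natural language prompts, templating, and Python code more concrete, here is a minimal sketch of a Python step feeding a prompt template. It is not prompt flow's API: the function and template names are hypothetical, and `string.Template` is used instead of a full templating language only so the example needs nothing beyond the standard library.

```python
from string import Template


def clean_question(raw: str) -> str:
    """Python step (hypothetical): collapse whitespace and end with one '?'."""
    return " ".join(raw.split()).rstrip("?") + "?"


# Prompt template. string.Template stands in for a real templating language
# here only so the sketch runs with the standard library alone.
SUPPORT_PROMPT = Template(
    "You are a helpful support agent.\n"
    "Customer question: $question\n"
    "Answer in one sentence."
)


def render_prompt(raw_question: str) -> str:
    # The output of the code step fills the template's placeholder.
    return SUPPORT_PROMPT.substitute(question=clean_question(raw_question))
```

For example, `render_prompt("  how do I   reset my password??  ")` produces a prompt whose second line is `Customer question: how do I reset my password?`.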

Prompt flow offers a range of benefits that help users transition from ideation to experimentation and ultimately to production-ready LLM-infused applications:

- Prompt Engineering agility: users can easily track, reproduce, visualize, compare, evaluate, and improve their prompts and flows with various tools and resources.

- Enterprise readiness for LLM-infused applications: users can collaborate, deploy, monitor, and secure their flows with Azure Machine Learning’s platform and solutions.

Here are a few highlights of the features that address these Prompt Engineering pain points.

Simplify prompts and flow design and development

You can use a notebook-like programming interface, a Directed Acyclic Graph (DAG) view, and a chatbot experience to create different types of flows. You can also use built-in tools and samples to jump-start your Prompt Engineering projects. Prompt flow guides you through the whole workflow: authoring, variant tuning, single runs for debugging, bulk runs for testing and evaluation, and deployment of the flow. With prompt flow, you can develop LLM-powered applications for a variety of scenarios easily and efficiently.
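The DAG view reflects how a flow executes: each node runs only after the nodes whose outputs it consumes. The sketch below shows that idea with Python's standard `graphlib` module (Python 3.9+). The node names, the `NODES`/`DEPENDS_ON` layout, and the stubbed LLM step are hypothetical; this is not how prompt flow defines or runs flows internally.

```python
from graphlib import TopologicalSorter  # Python 3.9+


def clean_node(question: str) -> str:
    return question.strip()


def prompt_node(clean: str) -> str:
    return f"Answer briefly: {clean}"


def llm_node(prompt: str) -> str:
    # Stub standing in for an LLM call.
    return f"[model reply to '{prompt}']"


# Hypothetical flow definition: node name -> function, and node name -> the
# names of the nodes (or flow inputs) whose outputs it consumes.
NODES = {"clean": clean_node, "prompt": prompt_node, "llm": llm_node}
DEPENDS_ON = {"clean": {"question"}, "prompt": {"clean"}, "llm": {"prompt"}}


def run_flow(question: str) -> dict:
    """Execute every node once, in an order that respects its dependencies."""
    results = {"question": question}  # flow inputs seed the results table
    for name in TopologicalSorter(DEPENDS_ON).static_order():
        if name in results:
            continue  # a flow input, not a node
        inputs = {dep: results[dep] for dep in DEPENDS_ON[name]}
        results[name] = NODES[name](**inputs)
    return results
```

`run_flow("  What is a DAG? ")` returns every intermediate output, which is roughly what a single debug run lets you inspect; its `"llm"` entry is `"[model reply to 'Answer briefly: What is a DAG?']"`. If `DEPENDS_ON` contained a cycle, `static_order()` would raise `graphlib.CycleError`, which is why a flow has to be acyclic.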

Figure 2 Interactive development experience – Notebook-like experience, DAG view and chat box

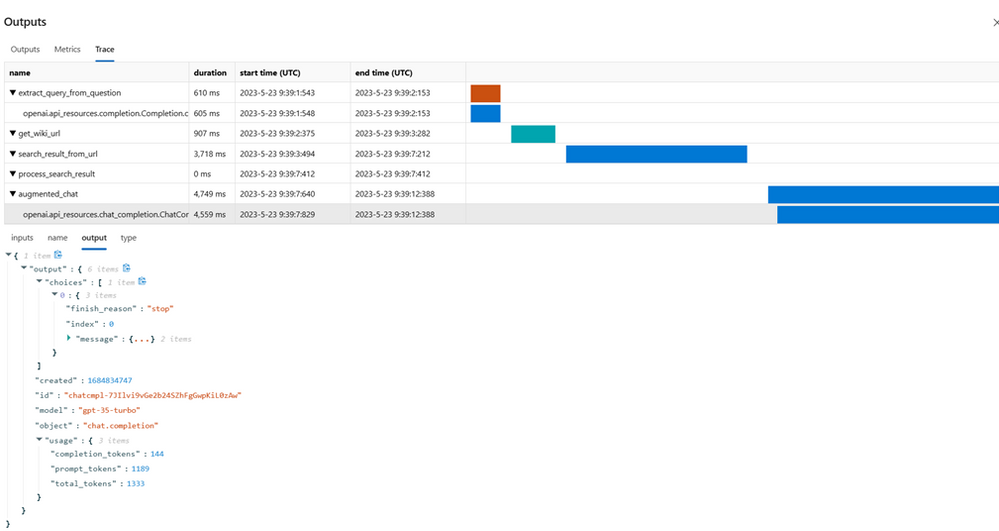

Figure 3 Debug experience with prompt flow

Efficiently tune, evaluate and optimize prompts

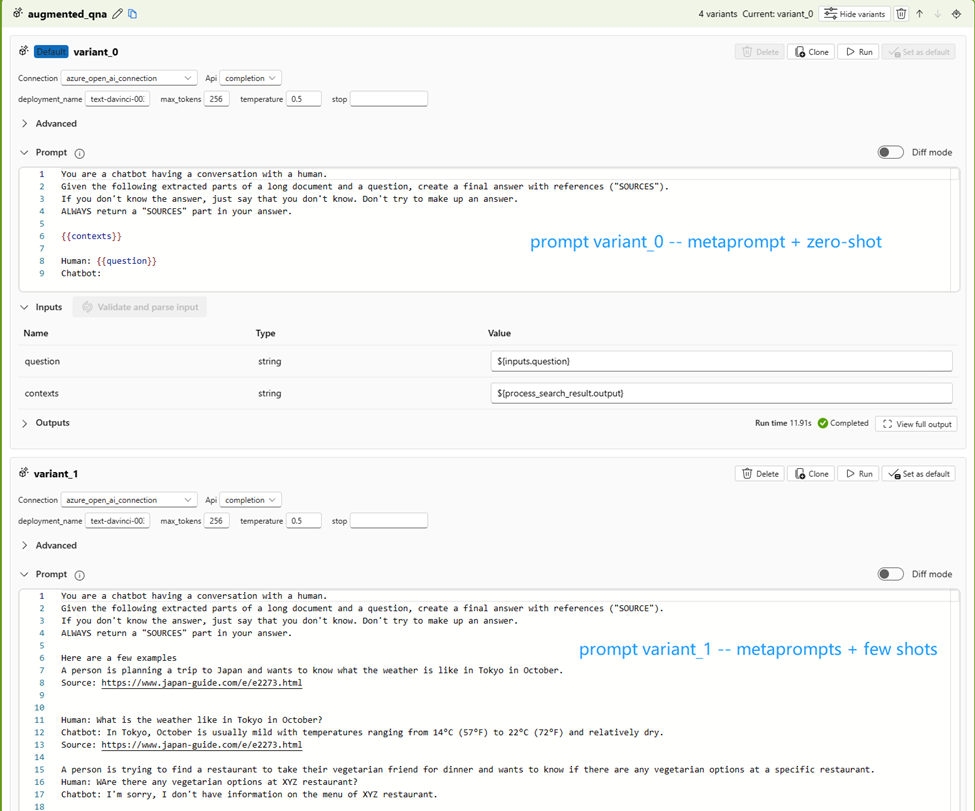

Prompt flow’s variants feature lets users create, run, evaluate, and compare multiple prompt variants. It streamlines the exploration and refinement of prompts, allowing users to iteratively improve their projects and fine-tune their LLM-infused applications. Because different prompts can be tested and compared side by side, users can quickly identify the most effective variants and make data-driven decisions that improve the performance and outcomes of their AI applications.
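Conceptually, comparing variants means running each candidate prompt over the same inputs and scoring the outputs the same way. The sketch below does this with a hypothetical stub model, two invented variants, and exact-match accuracy; prompt flow's own variant configuration and metrics are not shown in this post and are not reproduced here.

```python
def fake_llm(prompt: str) -> str:
    # Stub model (not a real API): it returns a bare label only when the
    # prompt asks for a one-word answer, otherwise a full sentence.
    label = "positive" if "great" in prompt else "negative"
    return label if "one word" in prompt else f"I think the sentiment is {label}."


# Two hypothetical prompt variants for the same step.
VARIANTS = {
    "variant_0": "What is the sentiment of this review? {review}",
    "variant_1": "Reply with one word, positive or negative. Review: {review}",
}

# Tiny labeled test set (illustrative only).
DATA = [
    {"review": "The service was great.", "label": "positive"},
    {"review": "Cold food and slow staff.", "label": "negative"},
]


def compare_variants(variants: dict, data: list) -> dict:
    """Run every variant over the same rows and return accuracy per variant."""
    scores = {}
    for name, template in variants.items():
        correct = sum(
            fake_llm(template.format(review=row["review"])) == row["label"]
            for row in data
        )
        scores[name] = correct / len(data)
    return scores
```

`compare_variants(VARIANTS, DATA)` returns `{"variant_0": 0.0, "variant_1": 1.0}`, so `max(scores, key=scores.get)` selects `variant_1`, the prompt that constrains the output format.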

Figure 4 Variants for prompt tuning

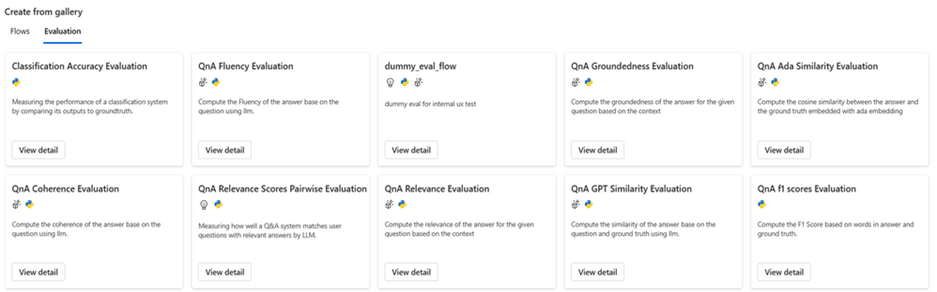

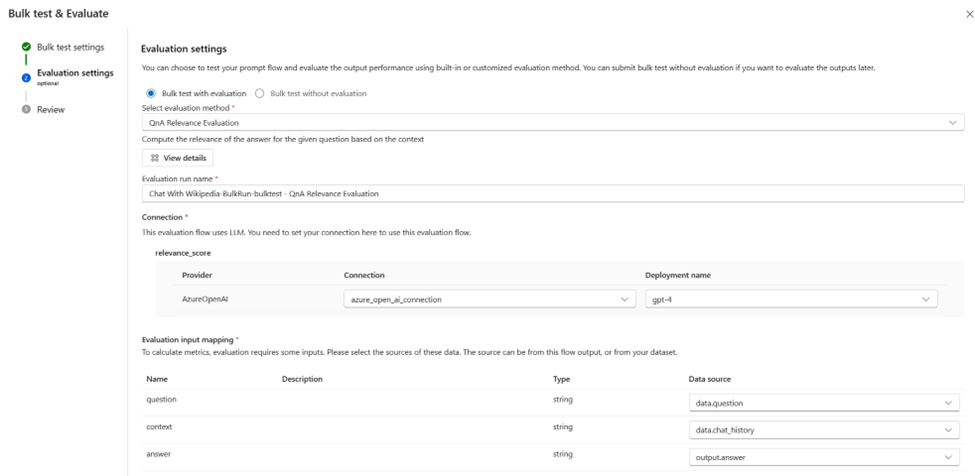

Before transitioning to production, use prompt flow’s evaluation tools and metrics to assess your prompts and flows thoroughly. These evaluation capabilities let you gauge the quality and performance of your prompts and flows. By setting up custom metrics, you can compare different prompt variants and flows and use the resulting data-driven insights to guide decisions and refine them. This leads to better LLM-infused applications and end-user experiences and helps ensure that your AI solutions are production-ready. Prompt flow offers a range of built-in evaluation flows with various metrics that you can use directly, or you can create your own evaluation flows tailored to specific scenarios.
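A custom evaluation typically has two parts: a metric computed for each row of a bulk run, and an aggregation that summarizes those scores for the whole run so that variants can be compared. The keyword-recall metric, the pass threshold, and the function names below are hypothetical examples, not prompt flow's built-in evaluation flows.

```python
from statistics import mean


def keyword_recall(answer: str, expected_keywords: list) -> float:
    """Per-row metric (hypothetical): share of expected keywords in the answer."""
    text = answer.lower()
    found = sum(kw.lower() in text for kw in expected_keywords)
    return found / len(expected_keywords)


def aggregate(scores: list, pass_threshold: float = 0.5) -> dict:
    """Aggregation step: summarize per-row scores for the whole bulk run."""
    return {
        "mean_recall": mean(scores),
        "pass_rate": sum(s >= pass_threshold for s in scores) / len(scores),
    }


# Example bulk-run outputs paired with the keywords each answer should mention.
rows = [
    ("Reset it from the Settings page.", ["reset", "settings"]),
    ("Please contact support.", ["invoice", "billing"]),
]
summary = aggregate([keyword_recall(answer, kws) for answer, kws in rows])
```

Here `summary` is `{"mean_recall": 0.5, "pass_rate": 0.5}`: the first answer contains both expected keywords and the second contains neither.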

Figure 5 Evaluation flow – built-in and create your own

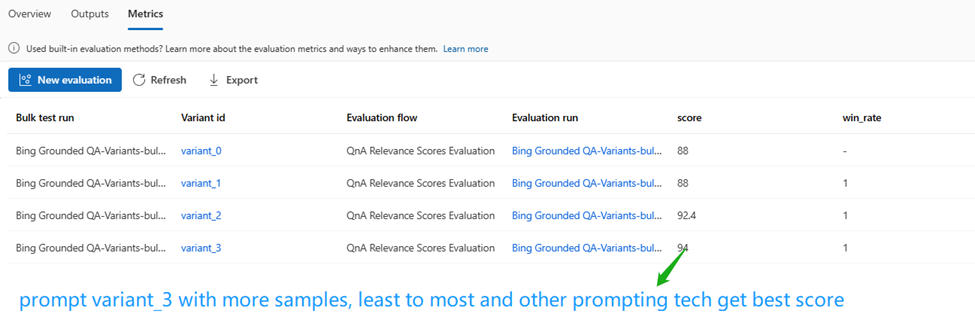

Figure 6 shows how to evaluate your flow during a bulk test, and Figure 7 shows the evaluation results of different variants side by side. These visuals help users identify the most effective prompts for their flow.

Figure 6 Test and evaluate your flow

Figure 7 Compare the metrics for variants in one place

Seamless transition from development to production

After evaluating the flow with various metrics to ensure quality, relevance, safety, and more, the next step is to integrate it into production for use with existing or new LLM-infused applications. Prompt flow provides one-click deployment of your flow as an enterprise-grade endpoint, along with an interactive GUI for testing the endpoint as a consumer. It also continuously monitors endpoints for evaluation metrics, latency, throughput, and more, with alerts that help you keep improving the flow and maintain the SLA of your LLM-infused applications.
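The kind of check behind such alerts can be sketched as a function that evaluates one monitoring window against thresholds. The nearest-rank p95 latency, the mean evaluation score, and the default thresholds below are assumptions chosen for illustration; they are not prompt flow's monitoring configuration, and throughput is left out for brevity.

```python
import math


def percentile(samples: list, p: float) -> float:
    """Nearest-rank percentile for 0 < p <= 100."""
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


def check_endpoint(latencies_ms: list, quality_scores: list,
                   p95_sla_ms: float = 2000.0, min_quality: float = 0.7) -> list:
    """Return alert messages for one monitoring window (empty list if healthy)."""
    alerts = []
    p95 = percentile(latencies_ms, 95)
    if p95 > p95_sla_ms:
        alerts.append(f"p95 latency {p95:.0f} ms exceeds SLA of {p95_sla_ms:.0f} ms")
    quality = sum(quality_scores) / len(quality_scores)
    if quality < min_quality:
        alerts.append(f"mean quality {quality:.2f} is below {min_quality:.2f}")
    return alerts
```

With 19 requests at 100 ms and one at 5,000 ms, the p95 latency is still 100 ms, so a single outlier raises no alert; two slow requests out of twenty push the p95 to 5,000 ms and trigger one.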

Reuse existing prompt assets developed by other frameworks

For users who have already developed prompts and flows with open-source libraries such as LangChain, prompt flow provides a seamless integration pathway. This compatibility lets you lift and shift your existing assets to prompt flow and continue Prompt Engineering, evaluation, and collaboration there to prepare your flow for production. Your previous work is not lost; it can be further enhanced within prompt flow for evaluation, optimization, and production.

Getting started with AzureML prompt flow

Thank you for your interest in Azure Machine Learning prompt flow. We are excited to announce that it is currently available in private preview, and we invite you to join. By signing up for the AzureML Insiders Program at https://aka.ms/azureMLinsiders, you will gain early access to this new tool and be among the first to experience its benefits.

Conclusion

Prompt flow is a powerful feature that simplifies and streamlines the Prompt Engineering process for LLM-infused applications. It enables users to create, evaluate, and deploy high-quality flows with ease and efficiency. By leveraging the new prompt engineering capabilities built on enterprise-grade Azure Machine Learning services, users can harness the full potential of LLMs and deliver impactful AI solutions for various business scenarios.

Watch the Azure Machine Learning Breakout sessions at Build:

- Build and maintain your company Copilot with Azure ML and GPT-4 (microsoft.com)

- Practical deep dive into machine learning techniques and MLOps (microsoft.com)

- Building and using AI models responsibly (microsoft.com)

Read blogs about new features related to large language models in AzureML: